We’ve finally released Quarkus, a project the team have been working on for the past 9 months. Our internal project name for the work was Protean because, as you’ll see, our aim is for it to be incredibly versatile and flexible for cloud development and deployment. (Yes, naming is the hardest part of computer science.) There are other articles and presentations to come which will go into more detail, but I wanted to give an overview of what we’re trying to achieve with Quarkus.

In many ways it’s possible to trace the heritage of Quarkus back to around 2010/2011, when we started working on improvements to JBossAS with version 6 and subsequently WildFly. The aim was to ensure EAP could run well in the first release of OpenShift as well as on constrained devices (aka IoT); some readers may have been present at the JBossWorld keynote where we demonstrated running on plug devices, just as Raspberry Pis were about to burst onto the scene. The cloud imposed requirements on us similar to those of IoT: limited processing power some of the time, reduced memory availability, storage restrictions, etc. Our general thinking was that if we could get projects and services running well on a constrained device, then they’d stand a good chance of running well in the cloud.

Over the following years we’ve had many OpenShift releases, further improvements in WildFly and other projects, and our entire xPaaS effort to bring our core capabilities, such as transactions, messaging and data caching, to OpenShift as services. In that time the cloud space has seen a number of paradigm shifts, including the massive adoption of Linux containers and Kubernetes. In the middleware space we’ve tuned our projects and services to work well with both, and outlined the vision for where we are going and why. With our OpenJDK team continuing to collaborate upstream, we’ve also been making changes to Java to ensure it runs well in those environments.

However, despite Red Hat/JBoss and other vendors and communities showing that Java works well in the cloud, doubts remain and some concerns are often cited. The JVM represents a huge and impressive amount of work by countless developers from a number of vendors over two decades. Although Java began life aimed at constrained devices, it rapidly evolved into a world whose assumptions are quite different from those of today’s cloud, e.g., the ability to consume a lot of memory, the requirement to dynamically update the running application/environment, and a reliance on extensive run-time optimisations. It’s important to remember that Java remains the number 1 programming language for enterprise developers working on the backend, and many organisations have invested a lot of time and money in their Java-based developer organisations and software because it has dominated the software landscape for so many years. Being able to continue to leverage those investments as they transition to the cloud is therefore incredibly important for this huge community. But concerns about Java (performance, runtime memory footprint, boot time, etc.) have caused some to re-evaluate their investment in Java and consider some of the newer languages, despite the fact that many of those languages don’t yet have the rich ecosystem of tools, utilities, etc. which the Java community has built up over the years.

Therefore, being able to continue to leverage this Java community, and to ensure that our own communities and customers can do so too, has been one of the important reasons behind what we’ve been doing with Quarkus and what you’ll hear about soon. As we pointed out when we kicked off xPaaS, we also want to take advantage of as much of our own community effort as possible, because projects such as WildFly, Hibernate, Apache ActiveMQ, Apache Camel, Infinispan, Narayana, Drools, jBPM, Eclipse Vert.x and many others are mature category leaders which we believe are still relevant in the cloud space. We’d prefer our communities and customers to be able to continue to rely on them rather than having to start from scratch.

OK, so what is Quarkus, and how does any of what I’ve said (the context above) relate to it? Well, broadly speaking, the Quarkus work falls into two categories:

- Leveraging our OpenJDK leadership and cloud improvements, as well as the Graal and SubstrateVM projects, Quarkus provides a way to develop Kubernetes-native Java applications and microservices. As you will see from other articles, our new website, or even the quickstarts, this covers a range of things. For example, in a Kubernetes environment the Linux container images are immutable, so dynamically modifying the running application, as is possible with approaches similar to those used within the WildFly microcontainer, is typically not necessary. Furthermore, although Write Once Run Anywhere is important for Java developers outside the cloud, it’s less important when running in Kubernetes, where Linux dominates. If you treat some of these constraints as advantages when targeting the cloud, then heavily optimising your Java application at build time, potentially even compiling it to a native executable, offers opportunities for improvement over traditional Java. For example, compared with its bytecode cousin, natively compiled Java can use significantly less memory and boot considerably faster. And if you, as a developer, need to make changes to your application, you do so in the way all Kubernetes developers do today: create a new container image and deploy it, as simply and quickly as that.

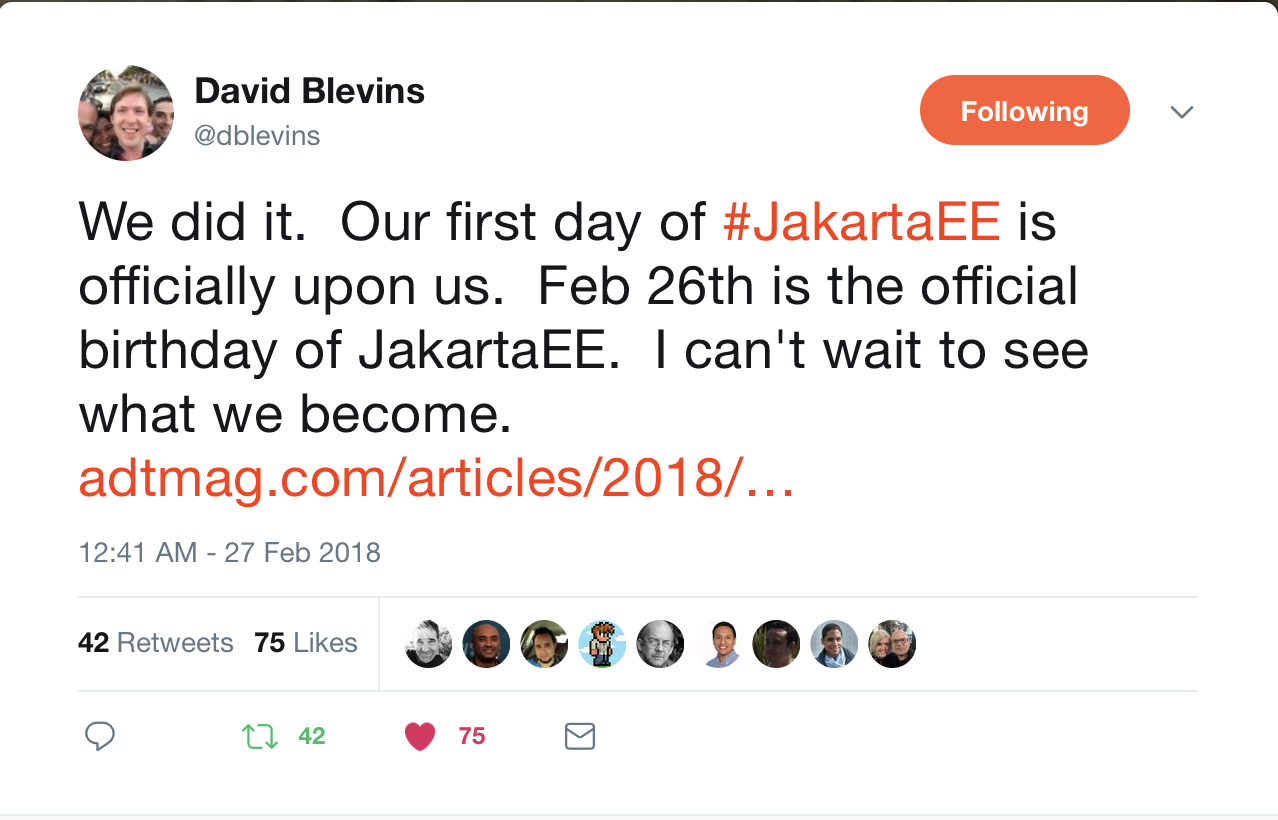

- Faster, smaller and more Kube-native Java based upon existing implementations is all well and good, but we simply couldn’t stop there. Building on the work we’d been doing around xPaaS, as well as new cloud work such as Strimzi and OpenShift Cloud Functions, the whole team agreed that we needed to define a prescriptive Kubernetes-native environment. We want to build on, and hopefully influence, industry standards such as Eclipse MicroProfile and Eclipse Jakarta EE. We’re taking an opinionated view on things such as Knative (for our serverless work), asynchronous reactive/event-driven microservices (yes, Eclipse Vert.x has a huge role to play) and generally anything that we feel makes Kubernetes-native Java development better for public, private and hybrid cloud.

I’ve only scratched the surface of the work the team embarked upon last year. I hope to write more about this over the coming months and maybe do some presentations with the developers. Come to Summit 2019 and hear from us first-hand (check the agenda and search for Protean). Many of our products and projects are either already making the Quarkus journey or plan to join soon. I’ll be continuing to support this upstream and in our products as a key effort for Red Hat. I’m even hoping to get back to contributing some more transactions-related work when I get some spare time; the little I managed to do back when we kicked off the work was enjoyable.